AI has garnered significant attention and shows promise for the future. However, adopting it prematurely without robust regulations poses risks. For the legal profession, one of the most critical concerns is the phenomenon of "hallucinations" in AI outputs.

For those unfamiliar with the term, a "hallucination" in the context of AI refers to instances where the AI system generates false or misleading information that is not grounded in its training data or the provided context. Essentially, the AI "makes up" information that appears plausible but is not correct.

Hallucinations persist because of the fundamental nature of generative AI. These systems are designed to fill gaps in their knowledge with what they predict should be there. This ability to "connect the dots" and generate plausible content is what makes them so powerful, but it is also what leads to hallucinations when the connections they make are incorrect.

Having exhausted my own knowledge, I sought the help of someone with a solid understanding of AI in general and of its challenges in particular.

I had the good fortune to meet Anthony (Tony) DeSimone, CPA, CMA, President of You're the Expert Now, LLC, at this year's Managing Partner Forum in May in Atlanta. I took an instant shine to him because he could explain AI in English to me (without me having to refer to a dictionary). It did not hurt that he was a CPA.

During a recent conversation, I asked if he might co-author an article with me (I write the introduction, and he writes the article) offering a pragmatic view of the current status of AI to address and dispel some of the current commotion. Tony graciously agreed, and the following article is the outcome of our collaboration.

In recent years, the legal profession has witnessed a significant surge in the adoption of artificial intelligence (AI) tools designed to assist with various core legal tasks. These tools, ranging from case law search and summarization to document drafting, promise to revolutionize legal practice. However, a recent study conducted by researchers from Stanford University and Yale University reveals that the integration of AI into legal research is not without its challenges, particularly when it comes to the reliability of the information generated.

Anyone closely following the developments in legal technology should not have been surprised by the results of this study. The findings confirm what many in the industry have suspected. While AI tools offer tremendous potential, they are not yet infallible and require careful oversight in their application to legal work.

Study Findings: Hallucinations in AI Legal Research Tools

The study, titled "Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools," provides the first preregistered empirical evaluation of AI-driven legal research tools. The researchers focused on products from major legal research providers, including LexisNexis (Lexis+ AI) and Thomson Reuters (Westlaw AI-Assisted Research and Ask Practical Law AI).

Key findings from the study include:

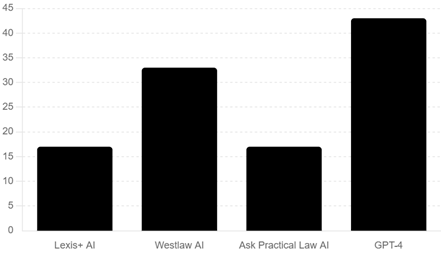

- Contrary to claims made by some providers, AI legal research tools are not "hallucination-free" or completely reliable.

- The AI tools examined in the study hallucinated between 17% and 33% of the time.

- While this represents a reduction in hallucinations compared to general-purpose chatbots like GPT-4, it still poses significant risks in the high-stakes legal domain.

- There are substantial differences between systems in terms of responsiveness and accuracy.

- These findings challenge the marketing claims made by some legal research providers who have touted their methods, such as retrieval-augmented generation (RAG), as "eliminating" or "avoiding" hallucinations or guaranteeing "hallucination-free" legal citations.
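To put the 17% to 33% range in practical terms, the short Python sketch below estimates how likely it is that at least one answer in a batch of research queries contains a hallucination. It is an illustration only, not a calculation from the study. It assumes that every query errs independently and at the same rate, which real research sessions may not satisfy. The function name is hypothetical.

```python
def chance_of_at_least_one_hallucination(per_query_rate: float, queries: int) -> float:
    """Probability that at least one of `queries` answers is hallucinated.

    Assumption (not from the study): each query errs independently
    at the same per-query rate.
    """
    if not 0.0 <= per_query_rate <= 1.0:
        raise ValueError("per_query_rate must be between 0 and 1")
    if queries < 0:
        raise ValueError("queries must be non-negative")
    # Complement rule: 1 minus the chance that every query is clean.
    return 1.0 - (1.0 - per_query_rate) ** queries


if __name__ == "__main__":
    # The two rates are the bounds reported in the study; the batch sizes are arbitrary.
    for rate in (0.17, 0.33):
        for n in (1, 5, 10):
            risk = chance_of_at_least_one_hallucination(rate, n)
            print(f"rate={rate:.0%}, queries={n}: {risk:.1%} chance of at least one hallucination")
```

Under these simplifying assumptions, the risk compounds quickly as the number of queries grows, which is why review of every AI-assisted answer matters more than the headline rate alone suggests.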

Understanding Hallucinations in AI

In the legal domain, hallucinations can be particularly problematic. They include:

- Citing non-existent cases or statutes

- Misquoting or misinterpreting existing legal precedents

- Creating fictional legal principles or arguments

These errors can have severe consequences in legal practice, potentially misleading lawyers and affecting the outcome of cases.

The Current State of Generative AI in Legal Research

The study's findings underscore a crucial point: generative AI is not yet good enough to rely on without significant review for accuracy in the legal industry. While AI tools can be incredibly useful for tasks like initial research or generating first drafts, they cannot replace the critical thinking and expertise of human legal professionals.

It's important to note that AI's capabilities in the legal field are rapidly evolving. Given the speed at which generative AI is being trained and upgraded, we expect to see significant improvements over the next 18 months.

However, even with these advancements, we don't expect AI to surpass human legal experts in the near future, particularly when it comes to complex legal reasoning and judgment.

Impact on the Legal Profession

As AI continues to advance, we can expect to see dramatic changes in the job descriptions of legal professionals, particularly for new lawyers and assistant staff such as paralegals. These changes are likely to occur sooner than many in the industry anticipate.

Forward-thinking law firms are already preparing for this shift. They recognize that while ChatGPT (or similar AI tools) may not replace lawyers entirely, those who effectively use these tools will have a significant advantage. A new saying captures the more likely outcome of AI's impact: "ChatGPT will not replace you, but the people who use it will."

Savvy law firms are taking proactive steps to adapt to this new reality:

- Creating an AI Use Policy: Firms are establishing clear rules and guidelines to ensure confidential information is not accidentally placed into these AI systems. These guidelines are crucial for maintaining client privacy and complying with ethical obligations.

- Training at all levels: Firms are investing in comprehensive AI training programs for all staff members, from new hires to senior partners.

- Redefining roles: New lawyers and paralegals are being trained to become "AI specialists" within their firms.

- Quality control: These AI-savvy professionals are being tasked with acting as "hallucination checkers," ensuring that all AI-generated content of consequence is appropriately cited and verified.

- Developing AI literacy: Firms are fostering a culture where understanding and effectively using AI tools is seen as a core competency for legal professionals.
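As a rough illustration of what a first-pass "hallucination checker" aid might look like, the Python sketch below pulls reporter-style citations out of an AI-generated draft and flags any that do not appear in a list the firm has already verified. Everything here is an assumption for illustration: the regular expression covers only a few U.S. reporter formats, the function names are hypothetical, and the verified list stands in for a lookup against an authoritative legal database. A flagged citation is not necessarily fabricated, and an unflagged one is not necessarily quoted or applied correctly, so human review remains essential.

```python
import re

# Simplified, illustrative pattern for citations such as "347 U.S. 483" or
# "999 F.3d 1234". Real citation formats are far more varied than this.
CITATION_PATTERN = re.compile(
    r"\b\d{1,4}\s+(?:U\.S\.|F\.2d|F\.3d|F\.4th|F\. Supp\. 2d|F\. Supp\.)\s+\d{1,5}\b"
)


def extract_citations(text: str) -> list[str]:
    """Return reporter-style citations found in the text, in order of appearance."""
    # Collapse internal whitespace so line breaks do not create false mismatches.
    return [" ".join(m.group(0).split()) for m in CITATION_PATTERN.finditer(text)]


def flag_unverified(text: str, verified: set[str]) -> list[str]:
    """Return citations in the text that are absent from the verified set."""
    return [c for c in extract_citations(text) if c not in verified]


if __name__ == "__main__":
    # Hypothetical draft; the second citation is a placeholder, not a checked reference.
    draft = "See 347 U.S. 483 and 999 F.3d 1234 for support."
    # Stand-in for citations a reviewer has already confirmed in an authoritative source.
    verified_citations = {"347 U.S. 483"}
    for citation in flag_unverified(draft, verified_citations):
        print(f"Needs manual verification: {citation}")
```

A tool like this only narrows down where a reviewer should look first; it does not confirm that a cited case says what the draft claims it says.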

The Future of Hallucinations in Legal AI

While the current state of AI in legal research is far from perfect, there is reason for optimism. As AI technology continues to advance, we expect to see a gradual reduction in the frequency and severity of hallucinations. However, it's essential to understand that hallucinations are unlikely to disappear entirely in the foreseeable future. Legal professionals should remain vigilant, especially when software representatives tout their products as "hallucination-free."

Despite this limitation, we can expect several positive developments:

- Improved accuracy: As AI models are refined and trained on larger, more diverse datasets, the overall accuracy of their outputs will improve.

- Better detection: Advanced techniques will be developed to identify potential hallucinations more effectively, allowing for easier verification.

- Increased transparency: AI systems may become better at indicating their level of certainty about different pieces of information, helping users distinguish between factual statements and more speculative content.

- Enhanced integration: AI tools will likely become more seamlessly integrated with authoritative legal databases, reducing the likelihood of citing non-existent sources.

Conclusion: Navigating the AI-Augmented Legal Landscape

Hallucinations will continue to be a challenge for law firms using generative AI for the foreseeable future. However, the frequency and impact of these errors are expected to decrease over time. In the meantime, here are the steps we suggest law firms can take to mitigate risks and maximize the benefits of AI:

- Invest in training: Ensure that all staff members, especially new hires, are well-versed in both the capabilities and limitations of AI tools.

- Implement verification processes: Establish robust procedures for checking AI-generated content, with a particular focus on verifying citations and critical legal arguments.

- Foster AI literacy: Encourage a culture where AI is seen as a powerful tool to augment human expertise, not replace it.

- Stay informed: Keep abreast of the latest developments in legal AI, including improvements in accuracy and new features that can help detect or prevent hallucinations.

- Maintain ethical standards: Ensure that the use of AI in legal practice aligns with professional ethical obligations, including the duty to provide competent representation.

By taking these steps, law firms can position themselves to harness the power of AI while mitigating its risks. The future of legal practice will likely involve a symbiotic relationship between human expertise and AI capabilities. Those who can effectively navigate this new landscape, leveraging AI's strengths while compensating for its weaknesses, will be well-positioned to thrive in the evolving legal industry.

As we move forward, ongoing research and open dialogue about the capabilities and limitations of AI in legal contexts will be crucial. By maintaining a balanced perspective – neither overly reliant on AI nor dismissive of its potential – the legal profession can work towards a future where AI enhances, rather than undermines, the quality and efficiency of legal services. The future is bright!

Who is Tony DeSimone?

A seasoned Certified Public Accountant (CPA) and Certified Management Accountant (CMA) with over 23 years of experience, Tony is dedicated to the prosperity and advancement of small businesses, drawing on both the solid foundations of accounting and the innovative possibilities of artificial intelligence.

Alongside offering a comprehensive array of services grounded in expert financial guidance, he is at the forefront of integrating ChatGPT and other AI technologies to boost efficiency, safeguard sensitive data, and enhance profitability. This dual approach not only keeps businesses ahead but also reflects his journey towards attaining a 'ChatGPhD' to master AI in the business context and to guide business executives on their path to achieving their own.

His enthusiasm for helping small businesses and individuals achieve their ambitions is matched by his commitment to innovation. Integrating AI into his consultancy services not only offers novel solutions to old problems but also reflects his role in helping executives navigate their journeys towards AI proficiency, including the pursuit of their 'ChatGPhD.'

His path has led him from beginnings at Deloitte to significant roles such as CFO, COO, President, and trusted adviser, where he has consistently aimed to transform chaos into order. Beyond consultancy, his engagement as an adjunct professor and executive director at the State University of New York at Buffalo, along with his involvement with the University at Buffalo Center for Entrepreneurial Leadership (CEL), underscores his dedication to fostering a community of learning and innovation.

Tony can be reached by email at tony@youretheexpertnow.com or by telephone at 716.912.1982.

Who is Stephen Mabey?

Stephen Mabey is a CPA, CA, and the Managing Director of Applied Strategies, Inc. His credentials include:

- Fellow of the College of Law Practice Management (one of 19 Canadians among 276 Fellows),

- Author of Leading and Managing a Sustainable Law Firm: Tactics and Strategies for a Rapidly Changing Profession, and Key Performance Indicators: An Introductory Guide (Amazon),

- Over 25 years in a senior management role with Stewart McKelvey, a 220-lawyer, six-office Atlantic Canadian law firm,

- Over 15 years of experience providing advice and counsel to small to mid-size law firms on a broad range of issues,

- A panelist and facilitator of the Managing Partner Information Exchange ("MPIE") at the annual Managing Partner Forum Leadership Conference held in Atlanta, Georgia, each May, and

- Maintains a growing mailing list that circulates articles directly and indirectly affecting law firms and offers free mini-benchmarking surveys.

Stephen has advised law firms for over 15 years on a wide range of issues, including strategic action planning, leadership, understudy (succession) planning, compensation (Partner and Associate), organizational structures and partnership arrangements, governance, business development, capitalization of partnerships, partnership agreements, lawyer and staff engagement, marketing, key performance indicators, competitive intelligence, finance, mergers, and practice transitions.

The most accurate perspective on what we do for our clients comes not from what we say we are about but from what they say about us. Stephen's clients describe him with phrases such as:

- "His advice and the solutions were tailored to our unique needs and business context";

- "It's as though he 'got us' right away and didn't waste our time (or our money) on the unnecessary stuff";

- "Stephen was invaluable in helping us steer through a difficult time of transition within our firm";

- "He has helped our firm immensely over the past number of years, and we are most appreciative of his contribution; it's like having a CEO on retainer";

- "Steve possesses that rare ability to bluntly deliver tough and practical business advice while maintaining his personal warmth and good humor";

- "Stephen has been invaluable in assisting with and guiding us through our strategic planning process. His broad knowledge and experience, combined with his engaging and inclusive facilitation style, promotes thoughtful and productive discussion among our partners"; and

- "Steve always pushes the leading edge in terms of new ideas and new ways to carry on business."

Stephen can be reached by email at smabey@appliedstrategies.ca or by phone at 902.499.3895.